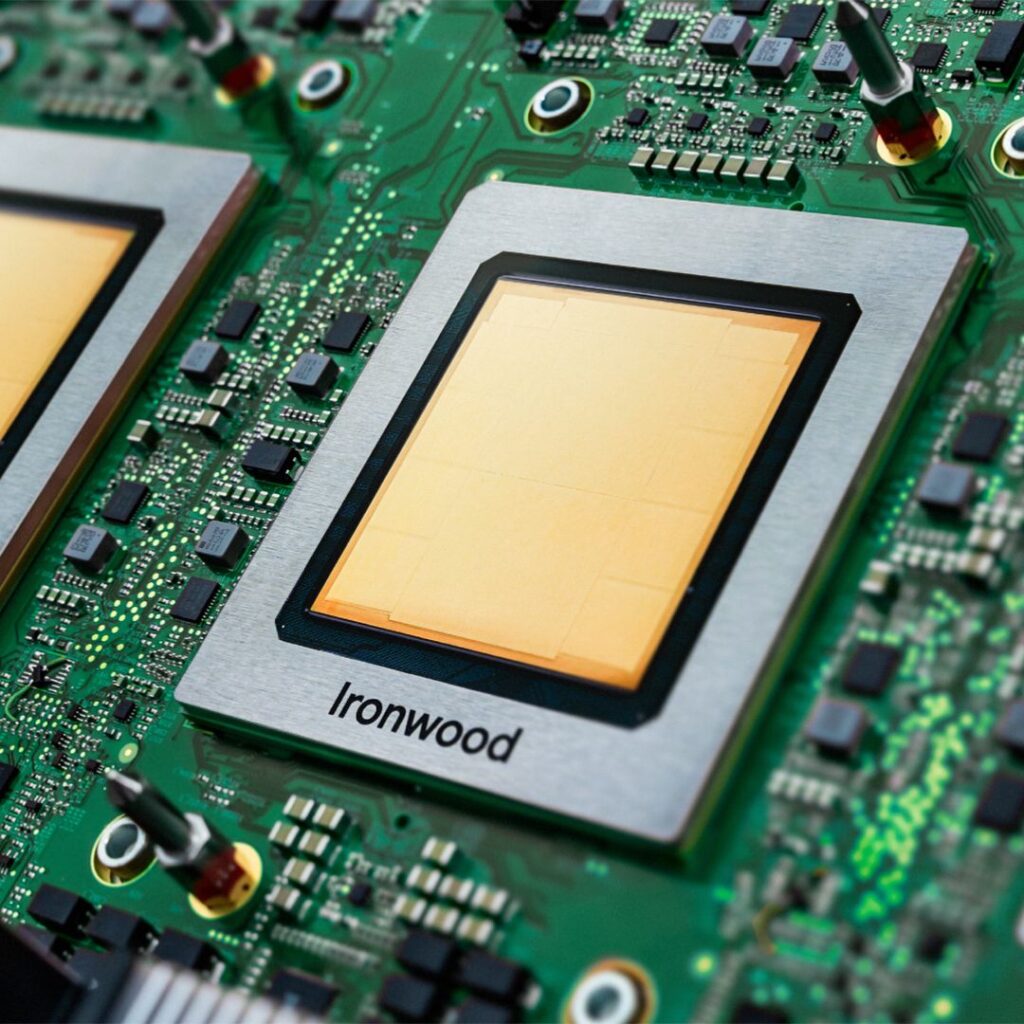

Google LLC has officially introduced its latest Tensor Processing Unit (TPU), codenamed Ironwood, marking a major advance in the company’s AI hardware strategy. The seventh-generation TPU was announced at Google’s annual Cloud Next conference and is built to handle large-scale machine learning and generative AI workloads, including foundation models for tools like Google Gemini and other next-generation AI systems.

Performance and Scalability

According to Google, Ironwood delivers substantial performance and efficiency improvements over its predecessor, the sixth-generation Trillium TPU. The chip is optimized for training and inference at hyperscale and targets enterprise customers that develop and deploy AI models through Google Cloud.

A Direct Challenge to Nvidia

The launch positions Google as a direct competitor to Nvidia, which currently dominates AI training infrastructure with its H100 GPUs and its Blackwell architecture. Google Cloud CEO Thomas Kurian emphasized the growing demand for AI compute resources: “AI workloads are growing exponentially, and developers need more options. Ironwood delivers the speed, efficiency, and scale required for the next generation of AI innovation.”

Supporting Generative AI

Google’s TPU architecture has powered major AI projects such as DeepMind’s AlphaFold and the large language models behind Gemini. With Ironwood, Google aims to provide cost-effective access to high-performance AI computing, reduce its dependence on external GPU suppliers, and reinforce Google Cloud’s position in the enterprise AI market.

Implications for the AI Chip Industry

Industry analysts note that Ironwood could shift the AI hardware landscape. “Google’s Ironwood is not just a chip; it’s a statement that Nvidia won’t remain unchallenged forever,” said technology analyst Priya Malhotra of The Times of India. The move reflects Google’s broader strategy of vertical integration, combining custom chips, data centers, software, and cloud services to deliver end-to-end AI solutions.

As global demand for AI computing surges, competition among Google, Nvidia, AMD, Intel, and other chipmakers is expected to intensify, shaping the infrastructure that powers the world’s most advanced AI systems.