High-Bandwidth Memory (HBM) is becoming the backbone of the AI era as industries increasingly rely on AI and data-intensive workloads. HBM addresses the long-standing memory wall problem by delivering far higher throughput than conventional DRAM, which lets large AI models run efficiently at scale.

Market Shift Driven by SK Hynix

SK Hynix has surged ahead in the competitive HBM market, surpassing Samsung to capture a dominant share. HBM revenue rose from 5% of DRAM sales in late 2022 to over 40% by early 2025, reflecting rapidly growing demand for AI-focused memory across cloud and hyperscale data centers.

Technical Advantages of HBM

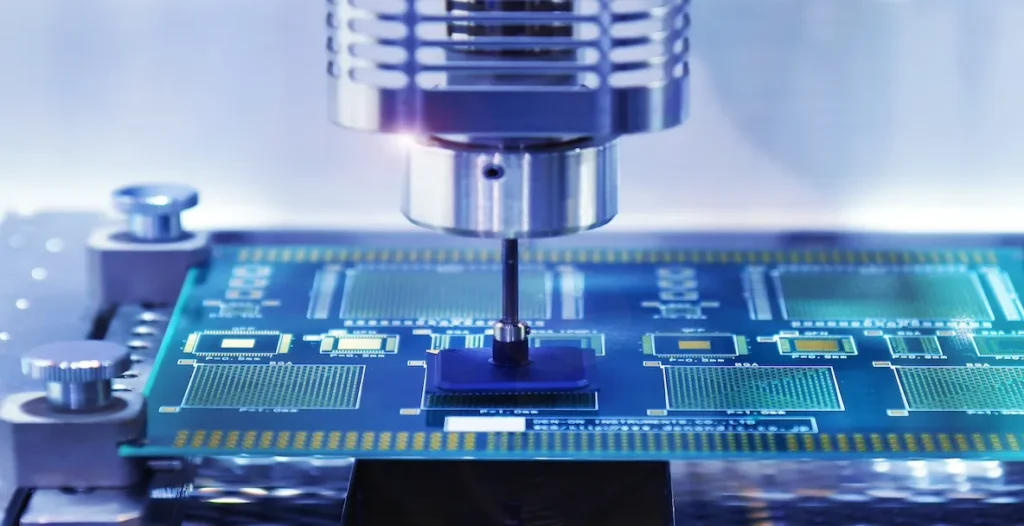

The key to HBM’s performance is its stacked architecture, which connects multiple memory dies with through-silicon vias (TSVs). These short vertical connections allow a much wider interface per stack than a DDR module can offer, enabling bandwidth levels unattainable by conventional DDR memory. That matters most for GPUs, TPUs, and other AI accelerators, where data movement speed often dictates overall performance.
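To make the bandwidth gap concrete, here is a minimal sketch of the usual peak-bandwidth arithmetic: interface width in bits, multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The figures are assumptions that do not come from this article: a nominal HBM3 stack with a 1024-bit interface at 6.4 Gb/s per pin, and a DDR5-6400 channel with a 64-bit data bus. They are theoretical peaks, not measured throughput, and the function name is purely illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    bus_width_bits  -- number of data pins on the interface
    pin_rate_gbit_s -- data rate per pin, in gigabits per second
    """
    # Each pin moves pin_rate_gbit_s gigabits per second; divide by 8 for bytes.
    return bus_width_bits * pin_rate_gbit_s / 8


# Assumed nominal figures (not taken from the article):
hbm3_stack = peak_bandwidth_gb_s(bus_width_bits=1024, pin_rate_gbit_s=6.4)
ddr5_channel = peak_bandwidth_gb_s(bus_width_bits=64, pin_rate_gbit_s=6.4)

print(f"HBM3 stack:   {hbm3_stack:.1f} GB/s")
print(f"DDR5 channel: {ddr5_channel:.1f} GB/s")
print(f"Ratio:        {hbm3_stack / ddr5_channel:.0f}x")
```

With the same per-pin rate assumed for both, the whole difference comes from interface width, which is what the stacked TSV design makes practical. An accelerator that carries several stacks multiplies the per-stack figure accordingly.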

Future Prospects: HBM4 and Integrated Logic

Next-generation HBM4 is expected to integrate logic dies directly within the memory stack. It promises further performance gains and efficiency improvements, and could keep HBM the primary memory solution for AI hardware for the next five years.

Strategic Importance in AI Supply Chains

Demand from cloud providers, chipmakers, and hyperscale data centers continues to soar. HBM is shifting from a specialized component to a strategic linchpin, essential for scaling AI operations, reducing bottlenecks, and enabling next-generation model deployment.

Conclusion

The rise of HBM illustrates how hardware innovation underpins AI’s growth trajectory. By ensuring data moves at the speed modern workloads demand, HBM has cemented its role as a foundational element of the AI ecosystem.

For detailed specifications and updates, visit SK Hynix.